With a flurry of tablet PC announcements (the iPad, HP, and the like) at CES and since, a great deal of buzz has hit the blogosphere about the demise of the Golden Age of the "free" Internet. This trend is all the more interesting because it represents vertical divergence at a time when Web-based technologies are defying typical trends by converging (Web and TV, mobile telephony and PCs, etc.).

Forrester, notably one

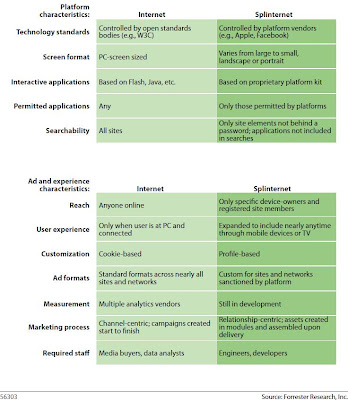

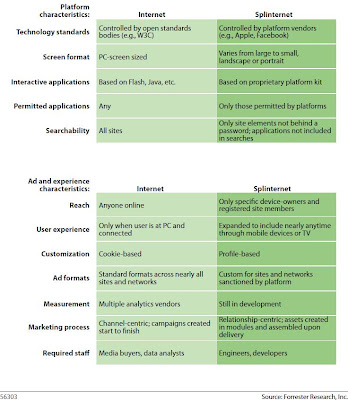

Josh Bernoff, has served up real proof of this new phenomenon, dubbed "The SplinterNet."

He recently published this table, which saliently makes his point.

This, of course, comes on the heels of the much-ballyhooed iPad, which now seems to be following the archetypal Apple product introduction:

1. Ship what is essentially a beta version, minus functionality to come (e.g., Flash capability, still camera, video camera, phone capability).
2. Follow the Microsoft model of letting consumers find and report the bugs.
3. Deploy overpriced initial model(s), a price-skimming policy that penalizes early adopters.
4. Drop the price after a few months and roll out new versions with the previously withheld functionality.

But I digress. Bernoff counsels us thusly: "As we all gird for the launch of the Apple Tablet, take a moment to step back and realize what all these new devices are doing." In Web 3.0 thinking, everything is supposed to be transparent and compatible. Yet as these new devices emerge, compatibility and open source are being thrown under the bus. Anyone whose market researches Web sites on mobile devices knows exactly what this means: to one extent or another, companies practically have to maintain an entirely separate version of their Web site just to be compatible with various phones.

Bernoff continues: "Meanwhile, more and more of the interesting stuff on the Web is hidden behind a login and password. Take Facebook for example. Not only do its applications not work anywhere else, Google can't see most of it."

For more than a decade, lots of people have asked, "How do we monetize content?" With the iPhone (and now the iPad and iPod), that discussion has expanded to include formerly free apps.

What astonishes me is the current near-complete lack of outrage at the fact that many news and content groups (read: The New York Times) are openly erecting paywalls and cutting off free content.

Platforms and hardware are quietly taking over (again) as the Golden Age of the Internet starts its long tail into legend.

And by the way, the FCC is worried that there may not be enough bandwidth to accommodate true tablet growth and usage.

Thoughts? E-mail me here.

Labels: Apple, brand development, convergence, digital future, FCC, Forrester, iPad, net neutrality, Web 3.0, Web trends

As a 55-year-old watching nearly everything change before my eyes (dramatic changes in public/private partnerships across the board, techniques of capital formation, the restructuring of healthcare and banking, how people make and buy music, new global views and expectations, online connectivity, and on and on), I've been casting around a bit to see whether anyone truly has a reliable forecasting model.
